The structure of a C application directly influences how memory is allocated within a microcontroller’s memory map. Unlike a personal computer, which typically has gigabytes of RAM and storage, microcontrollers have far fewer memory resources. Many microcontrollers offer less than 128 kilobytes of SRAM and under 1 megabyte of flash memory for storing code.

Given these limitations, it is essential for firmware developers to understand how to use the available memory efficiently. This requires a solid grasp of how C applications are structured and how different types of data—such as global variables, stack, heap, and code—are placed in memory. Efficient memory usage not only ensures that the application runs correctly but also helps in optimizing performance and power consumption, which are critical in embedded systems.

Code Section

Let’s take a closer look at the code section of memory. This is the region of flash where your executable program resides after it has been programmed onto the microcontroller. When you build your C application, the compiler and linker produce the machine code and read-only data, which the toolchain typically packages as a HEX file. This HEX file is what gets written into the code section during the flashing process. As your program grows (more functions, more logic, more constants), the HEX file grows too, and so does the amount of flash memory your application occupies in the code section.

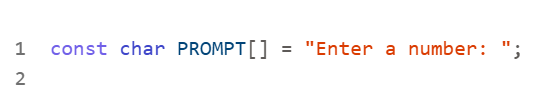

But the code section isn’t just for instructions like add, subtract, or memory access operations. It also stores read-only data, such as constants. Any variable marked with the const keyword is a candidate to be placed in this section, rather than in SRAM. For example, consider the following line of code:
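
A minimal sketch of such a declaration is shown here. Only the name PROMPT and the const qualifier come from the discussion; the string contents and the prompt_length helper are assumptions added for illustration.

```c
#include <stddef.h>

/* Illustrative prompt text (assumed). Because the array is const, the
 * toolchain can typically place it in flash with other read-only data. */
const char PROMPT[] = "Enter command: ";

/* Hypothetical helper: length of the prompt, excluding the terminating '\0'. */
size_t prompt_length(void)
{
    return sizeof(PROMPT) - 1u;
}
```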

Because PROMPT is declared as const, it doesn’t need to be stored in SRAM—it can live in flash memory, which is part of the code section. This is a more memory-efficient approach, especially on microcontrollers where SRAM is limited and precious. By placing constants in the code section, you free up SRAM for variables that actually need to change during runtime, helping your application run more efficiently within the constraints of the microcontroller.

Global Variables

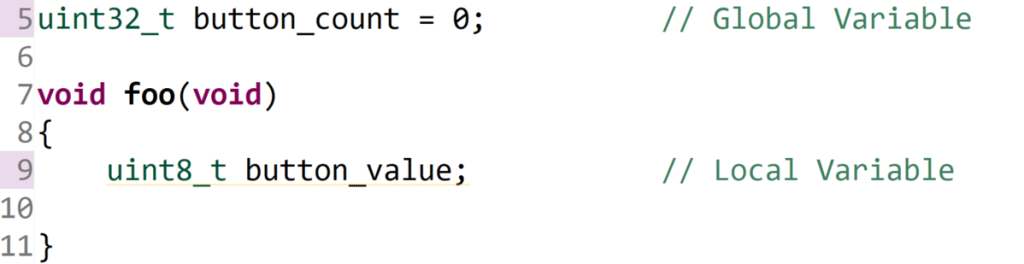

Global variables are a fundamental part of many embedded applications. These variables are declared outside of any function, making them accessible to all functions within the file in which they are defined.

A global variable has a single instance: only one definition with a given name may exist in the entire application, unless the variable is declared static, which limits it to its own file. If you need to access a global variable from another file, declare it there with the extern keyword. This tells the compiler that the variable is defined elsewhere, allowing multiple files to reference the same global variable without redefining it.
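
The sketch below illustrates how extern separates a declaration from the single definition. The file names in the comments and the names g_tick_count, tick, and ticks_elapsed are hypothetical; the three parts are shown in one listing only so that the example compiles on its own.

```c
#include <stdint.h>

/* counter.h (hypothetical): declaration only, no storage is reserved. */
extern uint32_t g_tick_count;

/* counter.c (hypothetical): the one definition; storage is reserved here. */
uint32_t g_tick_count = 0u;

/* timer.c (hypothetical): would include counter.h and share the variable. */
void tick(void)
{
    g_tick_count++;
}

uint32_t ticks_elapsed(void)
{
    return g_tick_count;
}
```

In a real project the extern line would live in a header that every file needing the variable includes.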

Global variables are statically allocated in the Global Memory Pool, which resides in the Data section of memory. At build time, the linker assigns each global variable a fixed address in SRAM. This ensures consistent access across all functions and files that reference it.

Static allocation is one of the simplest memory management strategies because the size and location of each variable are fixed at build time. However, this simplicity comes with a major limitation: you must know the exact memory requirements of your data ahead of time. In embedded applications, this is not always possible. For example, the amount of data your system needs to store might vary based on user input, sensor readings, or communication payloads.

One common workaround is to size each buffer from an estimate of the worst case, usually reserving more space than you think you’ll need. While this might prevent immediate issues, it introduces two risks:

- Underestimation: Your guess might still be too small, leading to buffer overflows or data loss.

- Overestimation: You might reserve a large block of SRAM that is only partially used, wasting valuable memory resources.

In embedded systems with limited SRAM, unused memory is a lost resource. That’s why it’s important to balance safety margins with efficient memory usage, and to consider dynamic allocation (via the heap) when memory requirements are variable.
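
As a rough illustration of this trade-off, the sketch below reserves a fixed-size global buffer and rejects data once it is full, so an underestimate leads to a reported failure rather than a buffer overflow. The names RX_BUFFER_SIZE, rx_store, and rx_used, and the 64-byte size, are assumptions chosen for the example.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define RX_BUFFER_SIZE 64u   /* assumed worst-case payload size */

/* Statically allocated: all 64 bytes of SRAM are reserved even when unused. */
static uint8_t rx_buffer[RX_BUFFER_SIZE];
static size_t  rx_count = 0u;

/* Stores one byte; returns false instead of writing past the end. */
bool rx_store(uint8_t byte)
{
    if (rx_count >= RX_BUFFER_SIZE) {
        return false;            /* estimate was too small: data is dropped */
    }
    rx_buffer[rx_count++] = byte;
    return true;
}

/* Number of bytes currently stored. */
size_t rx_used(void)
{
    return rx_count;
}
```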

Summary of Global Variables

| Feature | Description |
|---|---|
| Declaration Location | Declared outside of any function, typically at the top of a source file. |
| Uniqueness | Only one instance of a global variable with a given name exists in the entire application. |
| File Scope Access | Accessible from any function within the file where it is defined. |
| Cross-File Access | Can be accessed from other files using the extern keyword. |
| Memory Allocation | Allocated statically from the Global Memory Pool. |
| Address Assignment | Each global variable is assigned a fixed address at build time. |

Local Variables

Local variables are declared inside a function and exist only for the duration of that function’s execution. Once the function finishes, the stack space they occupied is released, which makes local variables ideal for temporary storage.

One of the advantages of local variables is that their names can be reused in different functions without conflict. For example, you could have a variable named counter in five different functions, and each would be completely independent of the others. This is because a local variable is visible only inside the function (more precisely, the block) in which it is declared.
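
The short sketch below shows two functions that each declare their own counter. The function names sum_to and count_set_bits are hypothetical and chosen only for illustration.

```c
/* Adds the integers 1..n; this counter exists only inside the loop. */
int sum_to(int n)
{
    int total = 0;
    for (int counter = 1; counter <= n; counter++) {
        total += counter;
    }
    return total;
}

/* Counts the 1 bits in value; this counter is unrelated to the one above. */
unsigned count_set_bits(unsigned value)
{
    unsigned counter = 0u;
    while (value != 0u) {
        counter += value & 1u;
        value >>= 1;
    }
    return counter;
}
```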

Local variables are allocated from the stack at runtime. Since the stack is typically small—often just a few kilobytes—you must be cautious when using local variables. Declaring too many, or having deeply nested function calls, can lead to a stack overflow. This condition can cause unpredictable behavior, such as program crashes or corruption of the global memory pool.

Summary of Local Variables

| Feature | Description |
|---|---|
| Declaration Location | Declared inside a function. |
| Lifetime | Exists only while the function runs; destroyed when the function exits. |
| Scope | Limited to the function in which they are declared. |
| Name Reuse | Can be reused in different functions without conflict. |
| Memory Allocation | Allocated from the stack at runtime. |
| Risk | Excessive use can cause stack overflow, leading to crashes or memory corruption. |

The Stack

Because local variables are stored on the stack, it’s important to understand how the stack works in a microcontroller environment. Most microcontrollers implement the stack using a dedicated register called the Stack Pointer (SP). This register always holds the address of the most recent allocation on the stack. As functions are called and return, the stack pointer is adjusted to allocate or deallocate memory accordingly.

The stack itself is allocated from SRAM, just like the global memory pool. However, the size of the stack is typically defined in the linker script or build settings used when compiling your application. This size is fixed at build time and does not grow dynamically.

When a function is called, it performs a run-time allocation by reserving space on the stack for its local variables, and that space is released automatically when the function returns. As your application runs, the stack therefore expands and contracts with each call and return. The compiler generates the code that adjusts the stack pointer, so the process is invisible to the programmer. Because each function allocates and then releases its stack memory, the same memory can be reused by different functions throughout the application. This reuse is one of the key advantages of stack-based memory management: it allows for efficient use of limited SRAM.

In addition to storing local variables, the stack is also used for temporary storage during function execution. Calculations on a Cortex-M processor operate on the core registers: of the 16 core registers, R0 through R12 are general purpose (GP), while R13, R14, and R15 serve as the stack pointer, link register, and program counter. These registers often contain important data that other parts of the application rely on. To maintain program correctness, an assembly function must not overwrite such a register unless it first saves the current value. Under the Arm procedure call standard, this obligation applies to R4 through R11, while R0 through R3 and R12 may be used as scratch registers.

To safely use a register, an assembly function must:

- Push (save) the current contents of the register onto the stack.

- Perform its operations using the register.

- Pop (restore) the original value from the stack before returning.
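
The three steps above can be modelled in plain C. The sketch below is a host-side model, not real Cortex-M code: the array model_stack stands in for SRAM, model_sp for the stack pointer, and model_r4 for a register that the caller expects to be preserved. All of these names are hypothetical.

```c
#include <stdint.h>

#define MODEL_STACK_WORDS 16u

/* Host-side model only: stand-ins for stack memory, SP, and register R4. */
uint32_t model_stack[MODEL_STACK_WORDS];
uint32_t model_sp = MODEL_STACK_WORDS;  /* index of the most recent push;
                                           MODEL_STACK_WORDS means empty */
uint32_t model_r4 = 0u;

/* PUSH: the stack grows toward lower addresses, so decrement first.
 * There is deliberately no overflow check in this simplified model. */
static void model_push(uint32_t value)
{
    model_sp--;
    model_stack[model_sp] = value;
}

/* POP: read the most recent value, then release its slot. */
static uint32_t model_pop(void)
{
    uint32_t value = model_stack[model_sp];
    model_sp++;
    return value;
}

/* Uses model_r4 as scratch space while leaving the caller's value intact. */
uint32_t square_plus_one(uint32_t x)
{
    uint32_t result;

    model_push(model_r4);    /* 1. save the current contents     */
    model_r4 = x * x;        /* 2. use the register              */
    result = model_r4 + 1u;
    model_r4 = model_pop();  /* 3. restore the original contents */
    return result;
}
```

After square_plus_one returns, both model_r4 and model_sp hold the values they had before the call, which is the property the save and restore steps are meant to guarantee.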

If a function declares too many local variables or if there are too many nested function calls, the stack can overflow—meaning it runs out of space. This can lead to unpredictable behavior, such as overwriting global variables or crashing the program. Stack overflows are among the most difficult errors to detect in embedded software development. They often occur at seemingly random times and can manifest in a variety of unpredictable ways—such as corrupted variables, unexpected resets, or erratic program behavior. The best way to prevent stack overflows is to write well-structured, memory-conscious code. This includes avoiding excessive use of local variables (especially large arrays) and minimizing deep or recursive function calls.
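
To illustrate the advice about large arrays, the sketch below contrasts a function that places a 4 KiB array on the stack with one that uses statically allocated storage instead. The names SAMPLE_COUNT, fill_samples, average_on_stack, and average_static are hypothetical, and the fill pattern is a placeholder for real sensor data.

```c
#include <stddef.h>
#include <stdint.h>

#define SAMPLE_COUNT 1024u

/* Hypothetical data source: fills the buffer with 0, 1, 2, ... */
static void fill_samples(uint32_t *buffer, size_t count)
{
    for (size_t i = 0u; i < count; i++) {
        buffer[i] = (uint32_t)i;
    }
}

/* Integer average of the buffer contents. */
static uint32_t average(const uint32_t *buffer, size_t count)
{
    uint64_t sum = 0u;
    for (size_t i = 0u; i < count; i++) {
        sum += buffer[i];
    }
    return (uint32_t)(sum / count);
}

/* Stack-hungry: 1024 * 4 bytes = 4 KiB of stack during every call. */
uint32_t average_on_stack(void)
{
    uint32_t samples[SAMPLE_COUNT];
    fill_samples(samples, SAMPLE_COUNT);
    return average(samples, SAMPLE_COUNT);
}

/* Stack-friendly: the array is statically allocated, outside the stack. */
uint32_t average_static(void)
{
    static uint32_t samples[SAMPLE_COUNT];
    fill_samples(samples, SAMPLE_COUNT);
    return average(samples, SAMPLE_COUNT);
}
```

Both functions compute the same result. The difference is where the 4 KiB lives: on a microcontroller with a stack of only a few kilobytes, the first version alone could exhaust it.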

The Heap

Up to this point, we’ve discussed the Global Memory Pool and the Stack. The final section of SRAM we’ll cover is the Heap. The easiest way to think about the heap is that it consists of all the remaining SRAM after the global memory pool and the stack have been allocated. It’s a flexible region of memory used for dynamic memory allocation—memory that is requested at runtime, rather than at compile time.

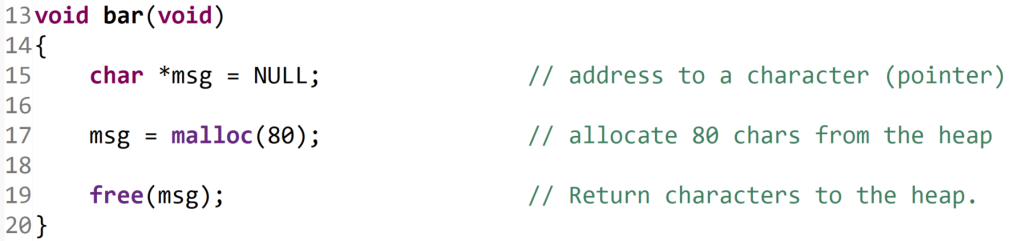

In C, dynamic memory is allocated using the malloc function, which is provided by the C standard library. The implementation of the heap can vary depending on the compiler and runtime environment, but conceptually, it consists of a list of free memory blocks, often managed using data structures similar to a linked list. When malloc is called, it searches through this list to find a block large enough to satisfy the request. Once found, it removes that block from the free list and returns a pointer to the starting address of the allocated memory.
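
The free-list idea can be sketched with a toy first-fit allocator. This is a simplified illustration under stated assumptions, not how any particular C library implements malloc: the names toy_heap_init, toy_malloc, toy_free, and POOL_SIZE are hypothetical, the pool is a fixed 256-byte array, and freed blocks are not merged with their neighbours.

```c
#include <stddef.h>
#include <stdint.h>

#define POOL_SIZE 256u

/* Header stored in front of every block; this is the heap's metadata. */
typedef struct block {
    size_t        size;  /* usable bytes that follow this header */
    struct block *next;  /* next free block, or NULL             */
} block_t;

/* The union forces the byte pool to be aligned for block_t headers. */
static union { block_t align; uint8_t bytes[POOL_SIZE]; } pool;
static block_t *free_list = NULL;

/* Starts with one free block that covers the whole pool. */
void toy_heap_init(void)
{
    free_list = (block_t *)pool.bytes;
    free_list->size = POOL_SIZE - sizeof(block_t);
    free_list->next = NULL;
}

/* First fit: walk the free list and take the first block large enough. */
void *toy_malloc(size_t size)
{
    const size_t unit = sizeof(block_t);
    block_t **link = &free_list;

    if (size > POOL_SIZE) {
        return NULL;
    }
    size = (size + unit - 1u) / unit * unit;  /* keep headers aligned */

    for (block_t *b = free_list; b != NULL; link = &b->next, b = b->next) {
        if (b->size < size) {
            continue;                         /* too small, keep searching */
        }
        if (b->size >= size + sizeof(block_t) + unit) {
            /* Split: the unused tail becomes a new free block. */
            block_t *rest = (block_t *)((uint8_t *)(b + 1) + size);
            rest->size = b->size - size - sizeof(block_t);
            rest->next = b->next;
            b->size = size;
            *link = rest;
        } else {
            *link = b->next;                  /* hand out the whole block */
        }
        return b + 1;                         /* memory just past the header */
    }
    return NULL;                              /* no block is large enough */
}

/* Returns a block to the front of the free list (no merging). */
void toy_free(void *ptr)
{
    if (ptr != NULL) {
        block_t *b = (block_t *)ptr - 1;
        b->next = free_list;
        free_list = b;
    }
}
```

Because this toy version never merges adjacent free blocks, repeated allocation and release of different sizes leaves the pool split into small pieces, which is one way a heap ends up with free memory that cannot satisfy a large request.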

When the memory is no longer needed, the memory MUST be returned to the heap using the free function. Failing to do so results in that block of memory being lost to the application since it will never re-enter the free list and cannot be allocated again. This is called a memory leak.

While it’s common to see malloc and free used within the same function, this is not required. The decision of when to free memory depends on the structure and lifetime of the data in your application.
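
The sketch below shows one way to structure this: one function allocates, a different function frees, and every malloc result is checked before use. The packet_t type and the packet_create and packet_destroy names are hypothetical.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical variable-length payload whose size is known only at runtime. */
typedef struct {
    uint8_t *data;
    size_t   length;
} packet_t;

/* Allocates a zeroed packet; returns NULL if the heap cannot satisfy it. */
packet_t *packet_create(size_t length)
{
    packet_t *packet;

    if (length == 0u) {
        return NULL;
    }
    packet = malloc(sizeof *packet);
    if (packet == NULL) {
        return NULL;
    }
    packet->data = malloc(length);
    if (packet->data == NULL) {
        free(packet);            /* avoid leaking the first allocation */
        return NULL;
    }
    memset(packet->data, 0, length);
    packet->length = length;
    return packet;
}

/* Returns both allocations to the heap; safe to call with NULL. */
void packet_destroy(packet_t *packet)
{
    if (packet != NULL) {
        free(packet->data);
        free(packet);
    }
}
```

A caller might create a packet when a message arrives and destroy it only after the message has been processed, possibly in a different part of the application. Whoever ends up owning the pointer is responsible for calling packet_destroy exactly once.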

While dynamic memory allocation offers flexibility, especially when memory requirements are not known at compile time, it comes with several important drawbacks.

- Memory Overhead: A portion of SRAM is used to manage the heap itself. This includes metadata such as block sizes and pointers (e.g., next pointers in a free list). This overhead reduces the total amount of memory available for actual data storage.

- Performance Cost: Allocating memory with malloc can be computationally expensive. The allocator must search through the free list to find a suitable block, which can consume many CPU cycles, especially if the heap is fragmented or large.

- Fragmentation: Over time, as memory is allocated and freed in varying sizes, the heap can become fragmented. This means that although there may be enough total free memory, it may be split into small, non-contiguous blocks. As a result, the system may be unable to satisfy a request for a large block of memory, even though enough space technically exists.

Memory Allocation Summary

The information we’ve covered about the stack and heap is a high-level overview of two much deeper and more complex topics. In reality, each of these areas could easily span several weeks of coursework. Our goal here is to highlight that microcontrollers have limited memory resources, and the way we write software directly impacts how efficiently those resources are used.

If we were writing applications entirely in assembly, we would need to manage every detail of stack and heap behavior ourselves. Fortunately, we’re using the C programming language, which abstracts away much of this complexity. The C compiler automatically generates the necessary PUSH and POP instructions to manage the stack, and the C standard library supplies malloc and free, which handle the underlying mechanisms of heap management.

This abstraction allows us to develop applications more quickly and safely, but it doesn’t eliminate the need for good programming practices. Even with C handling the low-level details, it’s still up to you to write memory-efficient code that respects the limitations of the microcontroller.

Best Practices: Memory Management in Embedded C

| Guideline | Why It Matters |
|---|---|
| Avoid allocating unused variables | Wastes valuable SRAM and can lead to inefficient memory usage. |
| Use global variables or heap for large arrays | Prevents stack overflow by keeping large data structures out of the limited stack space. |
| Always check if malloc returns a valid pointer | Ensures that memory allocation was successful before using the pointer. |
| Free heap memory when it’s no longer needed | Prevents memory leaks and keeps the heap available for future allocations. |
| Allocate enough SRAM for the stack | Prevents stack overflows, which can cause crashes or corrupt other memory regions. |